Team!

Storage, storage, storage. Good ol’ storage. Can’t live without it and…well, that’s about it. If you’re relying on SAN storage for your VMware infrastructure, then I have a few questions for you:

- Why aren’t you using, or at least considering, vSAN? 😀 (ping me directly and we can have a nice chat about it!)

- Are you optimizing your hosts for the best throughput to your storage?

I’ve worked with a lot of admins who bring a new host online, slap the bare-necessity configuration on it, have it zoned in for the necessary LUNs, and then start chomping away at those compute resources. Well, that works, and it’ll get the job done, but I like to optimize and get the most out of my infrastructure. Each storage vendor has its own set of best practices for configuring ESXi hosts, and those configurations usually involve creating storage claim rules.

Purpose

Claim rules determine which multipathing module the host uses for a specific storage device and, with it, the type of multipathing support the host provides for that device. VMware provides two native modules: the Native Multipathing Plugin (NMP) and the High-Performance Plugin (HPP). A vendor may also require or suggest a third-party multipathing plugin for its storage devices. NMP in turn relies on two types of sub-plugins, Storage Array Type Plugins (SATPs) and Path Selection Plugins (PSPs), which can be built into ESXi or provided by the vendor.

Path Selection Policies (PSPs)

A device’s Path Selection Policy dictates how the host uses the available paths to communicate with the backend storage device. ESXi ships with three native PSPs: Fixed (VMW_PSP_FIXED), Most Recently Used (VMW_PSP_MRU), and Round Robin (VMW_PSP_RR). The names pretty much describe how each policy operates, but let’s run through them anyway:

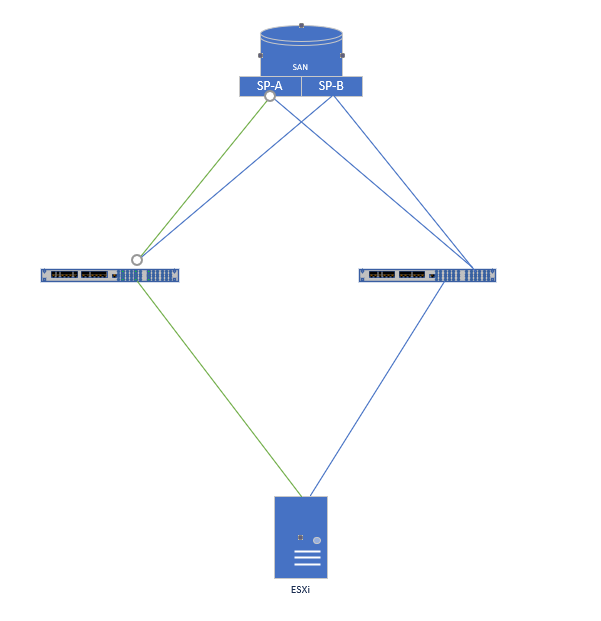

Fixed – This PSP selects the first working path discovered at system boot and stays on that path until A) you reboot and it picks a different preferred path, B) you explicitly designate a preferred path, or C) this path dies. Here’s a little red tape for you – if the path was automatically selected and it dies, the host will roll over to another available path and make that the preferred path. However, if you explicitly selected this path and it dies, it will remain the preferred path!

Assuming that you have multiple links from your ESXi host to your Fibre Channel switches, and multiple links from your FC switches to each Storage Processor in your SAN (and they are active/active), you can see this isn’t a very effective use of your storage infrastructure: all I/O to a given LUN travels over a single path while the others sit idle. Worth stating again: if you explicitly selected that green path and it goes down, the host sends I/O over another working path in the meantime, but the green path stays the preferred path, so the host moves I/O back to it as soon as it comes back online.

Most Recently Used – It means just that. The host sticks with the most recently used path until it becomes unavailable. When that happens, the host automatically chooses another path to the storage device, but it will not fail back to the original path when that path becomes available again. Visually, it looks just like the image depicting Fixed above.
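To make the failback difference between Fixed and MRU concrete, here is a toy model in plain POSIX sh. It is not VMware’s code, and `failover_trace` is a hypothetical function. It assumes exactly two paths: A, which you explicitly designated as preferred, and B, a second path that never fails.

```shell
# Toy model only: NOT how ESXi implements Fixed or MRU.
# Assumes two paths: A (explicitly designated as preferred) and B (never fails).
# Usage: failover_trace <fixed|mru> <state of A at each step: up|down>...
# Prints the path carrying I/O at each step.
failover_trace() {
  policy=$1
  shift
  current=A
  out=""
  for state in "$@"; do
    if [ "$state" = "down" ]; then
      # A is unavailable, so under either policy I/O has to move to B.
      current=B
    elif [ "$policy" = "fixed" ]; then
      # Fixed fails back to the preferred path as soon as it is up again.
      current=A
    fi
    # MRU stays on whatever path it used last, so it needs no branch of its own.
    out="$out$current "
  done
  echo "${out% }"
}
```

For example, `failover_trace fixed up down up` prints `A B A`, while `failover_trace mru up down up` prints `A B B`. Once MRU moves to B, it stays there.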

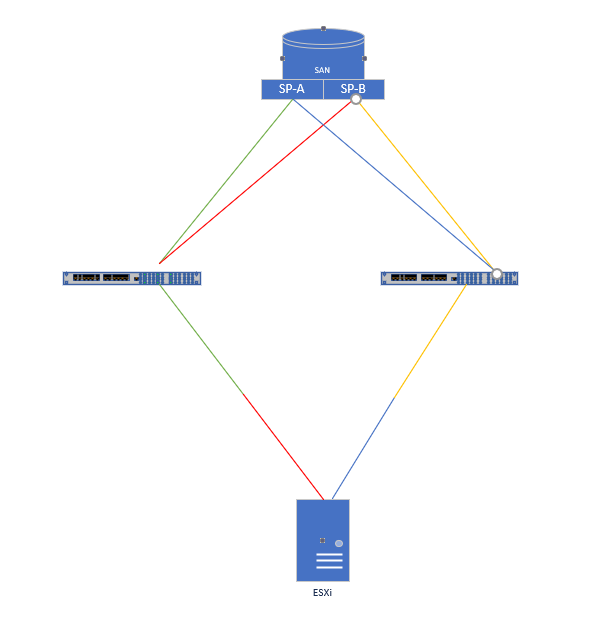

Round Robin – Using the host’s magical algorithm, it rotates through all active paths on an active-passive array, or through all available paths on an active-active array. As the diagram above shows, this seems like a better use of your infrastructure because every channel gets used at some point.
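To see which of these policies each device on a host is actually using, you can filter the output of `esxcli storage nmp device list`. The sketch below is a hypothetical helper (`show_psp_per_device` is not a VMware command). It assumes the usual layout, where each device ID sits on an unindented line and its details, including a `Path Selection Policy:` line, are indented beneath it with spaces. The `ESXCLI` variable exists only so the function can be tested away from a host.

```shell
# Hypothetical helper, not part of ESXi. Assumes `esxcli storage nmp device list`
# prints each device ID on an unindented line, followed by space-indented
# "Key: value" lines such as "Path Selection Policy: VMW_PSP_RR".
ESXCLI=${ESXCLI:-esxcli}   # overridable so the function can be tested off-host

show_psp_per_device() {
  $ESXCLI storage nmp device list | awk '
    /^[^ ]/                     { dev = $1 }        # unindented line: device ID
    /^ *Path Selection Policy:/ { print dev, $NF }  # last field: the PSP name
  '
}
```

Run `show_psp_per_device` in an SSH session on the host to get one line per device with its current PSP. It is also a quick way to confirm the per-LUN changes made with the loop in the next section.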

Storage Array Type Plug-Ins (SATPs)

These are simple to explain. SATPs handle the array-specific operations: monitoring path health, reporting changes in path state, and performing the actions needed to fail over between paths. ESXi also ships array-specific SATPs, such as the VMW_SATP_ALUA_CX used later in this post, but there are four generic built-in SATPs:

VMW_SATP_LOCAL – Built-in plug-in used for local, direct-attached storage.

VMW_SATP_DEFAULT_AA – Built-in plug-in used for active-active arrays.

VMW_SATP_DEFAULT_AP – Built-in plug-in used for active-passive arrays.

VMW_SATP_ALUA – Built-in plug-in used with ALUA-compatible arrays.
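Each SATP hands a default PSP to the devices it claims, and `esxcli storage nmp satp list` shows that pairing. The sketch below is a hypothetical wrapper (`show_satp_defaults` is not an ESXi command). It assumes the listing starts with a two-line header (column names, then a row of dashes) followed by one row per SATP, with the name in the first column and the default PSP in the second.

```shell
# Hypothetical helper, not part of ESXi. Assumes `esxcli storage nmp satp list`
# prints a two-line header (column names, then dashes) before one row per SATP.
ESXCLI=${ESXCLI:-esxcli}   # overridable so the function can be tested off-host

show_satp_defaults() {
  # Print "SATP default-PSP" for every row after the header.
  $ESXCLI storage nmp satp list | awk 'NR > 2 && NF >= 2 { print $1, $2 }'
}
```

This is handy for checking what a device would get if no claim rule specified a PSP. That is exactly the gap the vendor-specific rules below are meant to close.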

Vendor Best Practices

As previously mentioned, each storage vendor has its own recommendations on which SATP, PSP, and options to use. Always consult their online best practices guides for definitive answers, but most of the vendors I have worked with rely on VMW_SATP_ALUA with VMW_PSP_RR. They also recommend changing the Round Robin ‘iops’ option from its default of 1,000 to 1. This option sets how many I/O operations the host sends down a path before Round Robin switches to the next one, so with a value of 1, the host changes paths after every single I/O instead of every 1,000. That makes more efficient use of your paths, which usually leads to a performance increase. Another reason behind the change is path failures: sending a single I/O down each path before switching reduces the time it takes the host to determine that a path has failed. Some vendors report this time span dropping from tens of seconds to under 10 seconds after modifying this option.
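A toy model makes the effect of the ‘iops’ option easier to picture. The function below is hypothetical, plain POSIX sh, and not VMware’s actual Round Robin code. It prints the 0-based path index each I/O would use when the policy switches paths after every `limit` I/Os.

```shell
# Toy model only: NOT VMware's Round Robin implementation.
# Usage: rr_path_sequence <number of paths> <I/Os per path before switching> <total I/Os>
# Prints the 0-based path index that each I/O would be sent down.
rr_path_sequence() {
  paths=$1
  limit=$2
  ios=$3
  path=0   # path currently in use
  sent=0   # I/Os sent down the current path so far
  i=0
  out=""
  while [ "$i" -lt "$ios" ]; do
    out="$out$path "
    sent=$((sent + 1))
    # Once the limit is reached, rotate to the next path (wrapping around).
    if [ "$sent" -ge "$limit" ]; then
      sent=0
      path=$(( (path + 1) % paths ))
    fi
    i=$((i + 1))
  done
  echo "${out% }"
}
```

For example, `rr_path_sequence 2 1 4` prints `0 1 0 1`, while `rr_path_sequence 2 1000 4` prints `0 0 0 0`. With the default limit, a short burst of I/O never leaves the first path.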

Modifying Your Hosts

The easiest way to make these changes is from the CLI, because the vSphere Client only lets you modify the PSP. On top of that, you would have to go LUN by LUN, and the change would only affect existing LUNs, not any presented going forward.

After finding your vendor’s best practices, jump into an SSH session on your host. In this example, we’re backed by a Dell EMC Unity SAN (link to their best practices can be found at the end of the article). We’ll execute the following command:

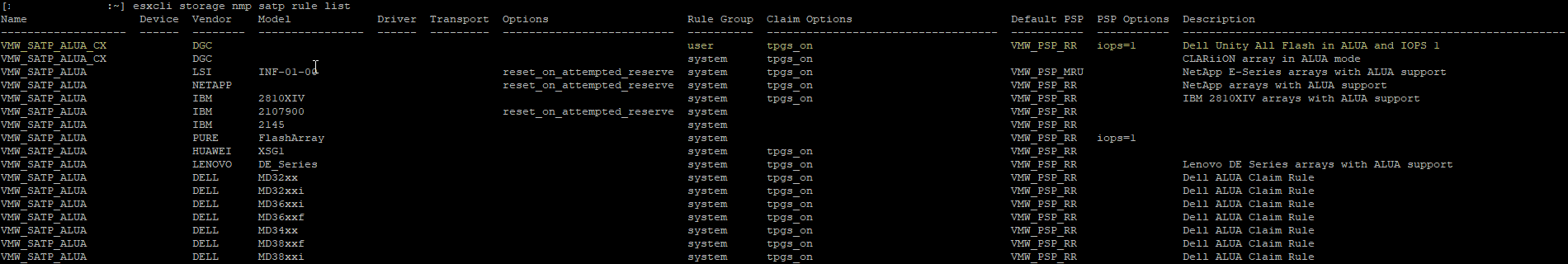

```shell
esxcli storage nmp satp rule add --satp "VMW_SATP_ALUA_CX" --vendor "DGC" --psp "VMW_PSP_RR" --psp-option "iops=1" --claim-option="tpgs_on" --description "Dell Unity All Flash in ALUA and IOPS 1"
```

What does this do for us? Technically, nothing for the LUNs that are already presented. It only comes into play when additional LUNs are presented from the Dell EMC Unity. Essentially, we’ve created a SATP claim rule that takes any NEW LUNs with a vendor string of ‘DGC’ and sets the SATP to ‘VMW_SATP_ALUA_CX’, the PSP to ‘VMW_PSP_RR’, and the number of I/Os sent down each path before switching to 1. The ‘tpgs_on’ claim option limits the rule to devices that report ALUA (TPGS) support. We also give the rule a short description so that we know what it is when we list our rules. If we list the host’s SATP claim rules, we can see that the rule exists and that it is part of the ‘user’ rule group, meaning it was created by, well, a user.
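One way to list the rules is `esxcli storage nmp satp rule list`. Its `--satp` option filters the listing to a single SATP, and a grep for DGC keeps only rules that match the Unity vendor string. `show_unity_rules` is a hypothetical wrapper, and it assumes the rule was created with `--vendor "DGC"` as shown above.

```shell
# Hypothetical helper, not part of ESXi: list the SATP claim rules for the
# Unity's SATP and keep only the rows that match the "DGC" vendor string.
ESXCLI=${ESXCLI:-esxcli}   # overridable so the function can be tested off-host

show_unity_rules() {
  $ESXCLI storage nmp satp rule list --satp VMW_SATP_ALUA_CX | grep DGC
}
```

In the output, look for the row whose Rule Group column reads `user` and whose description matches the one you supplied. Any `system` rows for the same vendor are the rules ESXi ships with.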

From now on, any LUNs presented from the Unity to this host will adhere to this claim rule. “But what about existing LUNs?” you ask? Those keep their current policy until they are claimed again (a reboot does this), so to change them right away, we’ll modify them individually. Luckily, there’s a simple way to do it – scripting!

```shell
for i in `esxcfg-scsidevs -c | grep DGC | awk '{print $1}'`; do esxcli storage nmp device set --device $i --psp VMW_PSP_RR; esxcli storage nmp psp roundrobin deviceconfig set --type=iops --iops=1 --device=$i; done
```

This takes the output of esxcfg-scsidevs -c, keeps only the lines containing DGC, and then trims out everything except the first column, which is the list of device UIDs (the naa.* identifiers). That list feeds a for loop that sets each LUN’s PSP to Round Robin and then lowers its I/O operation limit from the default of 1,000 to 1. Voila! Give the host a quick reboot and you’re all set!
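Before running the loop against a production host, it can help to preview exactly which devices it will touch. The sketch below is a hypothetical dry-run variant (`plan_rr_changes` is not an ESXi command). It applies the same `grep DGC | awk '{print $1}'` filter to `esxcfg-scsidevs -c` output read from standard input, but it only prints the esxcli commands instead of running them.

```shell
# Hypothetical dry-run helper: reads `esxcfg-scsidevs -c` output on stdin and
# prints, for each DGC device, the two esxcli commands the loop would run.
plan_rr_changes() {
  grep DGC | awk '{ print $1 }' | while read -r dev; do
    echo "esxcli storage nmp device set --device $dev --psp VMW_PSP_RR"
    echo "esxcli storage nmp psp roundrobin deviceconfig set --type=iops --iops=1 --device=$dev"
  done
}
```

On the host, `esxcfg-scsidevs -c | plan_rr_changes` shows the plan. Once the device list looks right, `esxcfg-scsidevs -c | plan_rr_changes | sh` executes it. Keeping the plan separate from the execution means a grep that matches more (or fewer) devices than you expected shows up before anything changes.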

Links

Just remember that while a lot of the vendors may have the same recommendations, it’s always best to check their documentation or open a support case with them instead of assuming. I went ahead and gathered the “Best Practices on VMware” links from some of the storage vendors that I encounter often in the field. Check out the “Multipathing” section of each for details about which SATP, PSP, and related options should be used with your storage.

HPE 3PAR – https://support.hpe.com/hpesc/public/docDisplay?cc=us&docId=emr_na-c03290624&lang=en-us

HPE Primera – https://support.hpe.com/hpesc/public/docDisplay?docId=a00088903en_us

Thanks for reading. If you enjoyed the post, make sure to check us out at dirmann.tech and follow us on LinkedIn, Twitter, Instagram, and Facebook!


Paul Dirmann (vExpert PRO*, vExpert***, VCIX-DCV, VCAP-DCV Design, VCAP-DCV Deploy, VCP-DCV, VCA-DBT, C|EH, MCSA, MCTS, MCP, CIOS, Network+, A+) is the owner and current Lead Consultant at Dirmann Technology Consultants. A technology evangelist, Dirmann has held both leadership positions, as well as technical ones architecting and engineering solutions for multiple multi-million dollar enterprises. While knowledgeable in the majority of the facets involved in the information technology realm, Dirmann honed his expertise in VMware’s line of solutions with a primary focus in hyper-converged infrastructure (HCI) and software-defined data centers (SDDC), server infrastructure, and automation. Read more about Paul Dirmann here, or visit his LinkedIn profile.